Risk-First Analysis Framework

Agency Risk

Coordinating a team is difficult enough when everyone on the team has a single Goal. But, people have their own goals, too. Sometimes, their goals harmlessly co-exist with the team’s goal, but other times they don’t.

This is Agency Risk. This term comes from finance and refers to the situation where you (the “principal”) entrust your money to someone (the “agent”) in order to invest it, but they don’t necessarily have your best interests at heart. They may instead elect to invest the money in ways that help them, or outright steal it.

“This dilemma exists in circumstances where agents are motivated to act in their own best interests, which are contrary to those of their principals, and is an example of moral hazard.” - Principal-Agent Problem, Wikipedia

The less visibility you have of the agent’s activities, the bigger the risk. However, the whole point of giving the money to the agent was that you would have to spend less time and effort managing it, hence the dilemma. In software development, we’re not lending each other money, but we are being paid by the project sponsor, so they are assuming Agency Risk by employing us.

As we saw in the previous section on Process Risk, Agency Risk doesn’t just apply to people: it can apply to running software or whole teams - anything which has agency over its actions.

“Agency is the capacity of an actor to act in a given environment… Agency may either be classified as unconscious, involuntary behaviour, or purposeful, goal directed activity (intentional action).” - Agency, Wikipedia

Agency Risk clearly includes the behaviour of Bad Actors but is not limited to them: there are various “shades of grey” involved. So first, we will look at some examples of Agency Risk, in order to sketch out where the domain of this risk lies, before looking at three common ways to mitigate it: monitoring, security and goal alignment.

Personal Lives

We shouldn’t expect people on a project to sacrifice their personal lives for the success of the project, right? Except that “Crunch Time” is exactly how some software companies work:

“Game development… requires long working hours and dedication from their employees. Some video game developers (such as Electronic Arts) have been accused of the excessive invocation of “crunch time”. “Crunch time” is the point at which the team is thought to be failing to achieve milestones needed to launch a game on schedule.” - Crunch Time, Wikipedia

People taking time off, going to funerals, looking after sick relatives and so on are all acceptable forms of Agency Risk. They are the Attendant Risk of having staff rather than slaves.

The Hero

“The one who stays later than the others is a hero.” - Hero Culture, Ward’s Wiki

Heroes put in more hours and try to rescue projects single-handedly, often cutting corners like team communication and process in order to get there.

Sometimes, projects don’t get done without heroes. But other times, the hero has an alternative agenda to just getting the project done:

- A need for control, and for their own vision.

- A preference to work alone.

- A desire for recognition and acclaim from colleagues.

- A desire for the job security of being a Key Person.

A team can make use of heroism, but it’s a double-edged sword. The hero can become a bottleneck to work getting done and, because they want to solve all the problems themselves, they under-communicate.

CV Building

CV Building is when someone decides that the project needs a dose of “Some Technology X”, but in actual fact, this is either completely unhelpful to the project (incurring large amounts of Complexity Risk), or merely a poor alternative to something else.

It’s very easy to spot CV building: look for choices of technology that are incongruently complex compared to the problem they solve, and then challenge by suggesting a simpler alternative.

Devil Makes Work

Heroes can be useful, but underused project members are a nightmare. The problem is, people who are not fully occupied begin to worry that actually, the team would be better off without them, and then wonder if their jobs are at risk.

Even if they don’t worry about their jobs, sometimes they need ways to stave off boredom. The solution to this is “busy-work”: finding tasks that, at first sight, look useful, and then delivering them in an over-elaborate way (Gold Plating) that’ll keep them occupied. This will leave you with more Complexity Risk than you had in the first place.

Pet Projects

A project, activity or goal pursued as a personal favourite, rather than because it is generally accepted as necessary or important. - Pet Project, Wiktionary

Sometimes, budget-holders have projects they value more than others without reference to the value placed on them by the business. Perhaps the project has a goal that aligns closely with the budget-holder’s passions, or it’s related to work they were previously responsible for.

Working on a pet project usually means you get lots of attention (and more than enough budget), but it can fall apart very quickly under scrutiny.

Morale Risk

“Morale, also known as Esprit de Corps, is the capacity of a group’s members to retain belief in an institution or goal, particularly in the face of opposition or hardship” - Morale, Wikipedia

Sometimes, the morale of the team or individuals within it dips, leading to a lack of motivation. Morale Risk is a kind of Agency Risk because it really means that a team member or the whole team isn’t committed to the Goal and may decide their efforts are best spent elsewhere. Morale Risk might be caused by:

- External Factors: Perhaps an employee’s dog has died, or they’re simply tired of the industry, or are not feeling challenged.

- The goal feels unachievable: in this case, people won’t commit their full effort to it. This might be due to a difference in the evaluation of the risks on the project between the team members and the leader. In military science, a second meaning of morale is how well supplied and equipped a unit is. This would also seem like a useful reference point for IT projects: if teams are under-staffed or under-equipped, this will impact motivation too.

- The goal isn’t sufficiently worthy, or the team doesn’t feel sufficiently valued.

Software Processes

There is significant Agency Risk in running software at all. Since computer systems follow rules we set for them, we shouldn’t be surprised when those rules have exceptions that lead to disaster. For example:

- A process continually writing log files until the disks fill up, crashing the system.

- Bugs causing data to get corrupted, causing financial loss.

- Malware exploiting weaknesses in a system, exposing sensitive data.
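The first failure above (logs filling the disk) is one where a limit can be placed on the process's agency quite cheaply. The following is a minimal sketch, assuming a Python process that logs through the standard library; the function names, logger name and size limits are illustrative choices rather than anything prescribed by this article.

```python
import logging
import logging.handlers
import os
import tempfile


def make_bounded_logger(path, max_bytes=1024, backups=2, name="bounded-demo"):
    """Return a logger whose files on disk stay within a fixed budget.

    RotatingFileHandler rolls over to path.1, path.2, ... before the
    current file would exceed max_bytes, and keeps at most `backups`
    old files, so the total is roughly max_bytes * (backups + 1).
    The logger name is a hypothetical choice for this example.
    """
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep the demo output out of the root logger
    handler = logging.handlers.RotatingFileHandler(
        path, maxBytes=max_bytes, backupCount=backups
    )
    logger.addHandler(handler)
    return logger


def total_log_bytes(directory):
    """Sum the size of every file in `directory`."""
    return sum(
        os.path.getsize(os.path.join(directory, entry))
        for entry in os.listdir(directory)
    )


if __name__ == "__main__":
    log_dir = tempfile.mkdtemp()
    log = make_bounded_logger(os.path.join(log_dir, "app.log"))
    for i in range(1000):
        log.info("message number %d", i)
    print(sorted(os.listdir(log_dir)), total_log_bytes(log_dir))
```

The trade-off is that old log lines are discarded once the budget is used up, so the limit trades one risk (a full disk) for another (lost diagnostic history).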

Teams

Agency Risk applies to whole teams too. It’s perfectly possible that a team within an organisation develops Goals that don’t align with those of the overall organisation. For example:

- A team introduces excessive Bureaucracy in order to avoid work it doesn’t like.

- A team gets obsessed with a particular technology, or their own internal process improvement, at the expense of delivering business value.

- A marginalised team forces their services on other teams in the name of “consistency”. (This can happen a lot with “Architecture”, “Branding” and “Testing” teams, sometimes for the better, sometimes for the worse.)

When you work with an external consultancy, there is always more Agency Risk than with a direct employee. This is because as well as your goals and the employee’s goals, there are also the consultancy’s goals.

This is a good argument for avoiding consultancies, but sometimes the technical expertise they bring can outweigh this risk.

Mitigating Agency Risk

Let’s look at three common ways to mitigate Agency Risk: Monitoring, Security, and Goal Alignment. Let’s start with Monitoring.

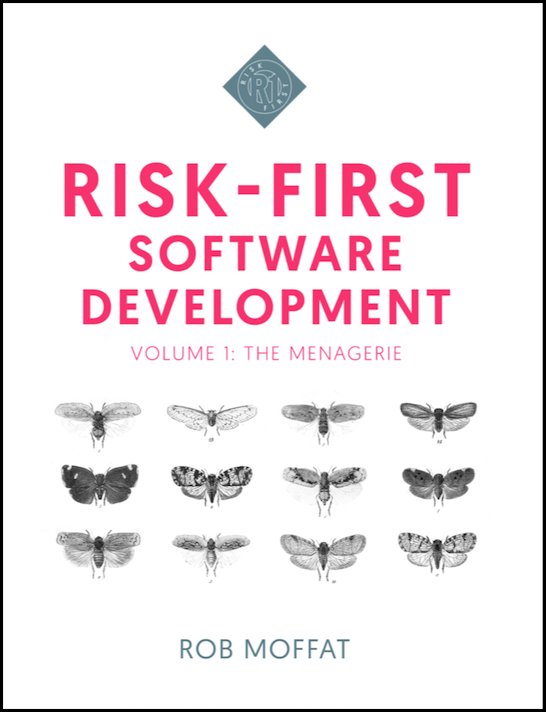

Monitoring

At the core of the Principal-Agent Problem is the issue that we want our agents to do work for us so we don’t have the responsibility of doing it ourselves. However, we pick up the second-order responsibility of managing the agents instead.

As a result (and as shown in the above diagram), we need to Monitor the agents. The price of mitigating Agency Risk this way is that we have to spend time doing the monitoring (Schedule Risk) and we have to understand what the agents are doing (Complexity Risk).
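For a software agent, monitoring can start very small. The sketch below assumes that the thing worth watching is disk usage and that 90% full is a sensible alert threshold; both are assumptions for illustration, and `disk_alert` and `check_path` are hypothetical names. It uses only `shutil.disk_usage` from the Python standard library.

```python
import shutil


def disk_alert(used_bytes, total_bytes, threshold=0.9):
    """Return a warning string if the disk is at or above `threshold`, else None."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    fraction = used_bytes / total_bytes
    if fraction >= threshold:
        return f"disk {fraction:.0%} full (threshold {threshold:.0%})"
    return None


def check_path(path="/", threshold=0.9):
    """Check the filesystem containing `path` against the threshold."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free
    return disk_alert(usage.used, usage.total, threshold)


if __name__ == "__main__":
    warning = check_path(".")
    print(warning or "disk usage OK")
```

Even this small check illustrates the cost described above: someone has to choose the threshold, run the check on a schedule, and decide who responds when it fires.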

Monitoring of software process agents is an important part of designing reliable systems and it makes perfect sense that this would also apply to human agents too. But for people, the knowledge of being monitored can instil corrective behaviour. This is known as the Hawthorne Effect:

“The Hawthorne effect (also referred to as the observer effect) is a type of reactivity in which individuals modify an aspect of their behaviour in response to their awareness of being observed.” - Hawthorne Effect, Wikipedia

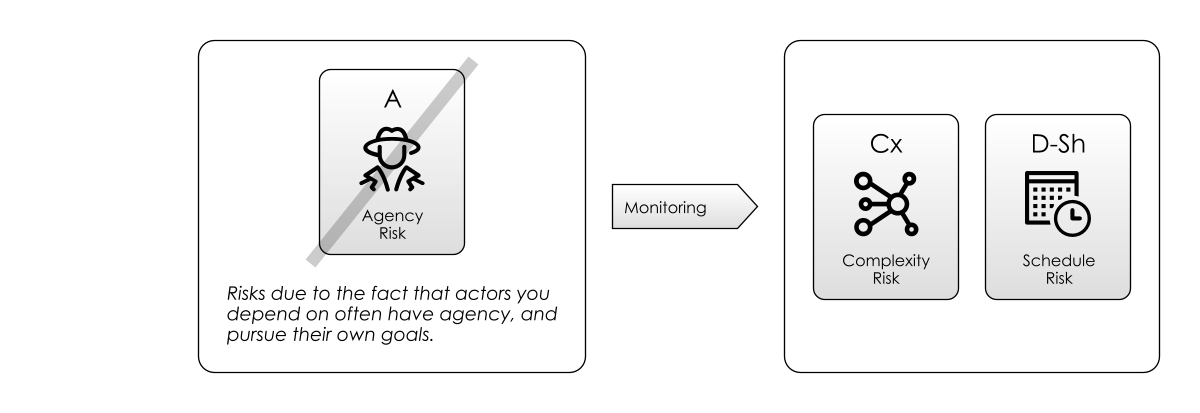

Security

Security is all about setting limits on agency - both within and outside a system.

Within a system we may wish to prevent our agents from causing accidental (or deliberate) harm, but we also have Agency Risk from unwanted agents outside the system. So security is also about ensuring that the environment we work in is safe for the good actors to operate in, while keeping out the bad actors.

Interestingly, security is handled in very similar ways in all kinds of systems, whether biological, human or institutional:

- Walls: defences around the system, to protect its parts from the external environment.

- Doors: ways to get in and out of the system, possibly with locks.

- Guards: to make sure only the right things go in and out. (i.e. to try and keep out bad actors).

- Police: to defend from within the system, against internal Agency Risk.

- Subterfuge: hiding, camouflage, disguises, pretending to be something else.

These work at various levels in our own bodies: our cells are enclosed by membranes that act as both walls and guards, controlling what goes in and out. Our bodies have skin to keep the world out, and we have mouths, eyes, pores and so on to allow things in and out. We have an immune system to act as the police.

Our societies work in similar ways: in medieval times, a city would have walls, guards and gates to keep out intruders. Nowadays, we have customs control, borders and passports.

We’re waking up to the realisation that our software systems need to work the same way: we have Firewalls and we lock down ports on servers to ensure there are the minimum number of doors to guard. We police the servers with monitoring tools and we guard access using passwords and other identification approaches.
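The idea of keeping "the minimum number of doors" can be sketched as an allowlist check: compare the ports that are actually accepting connections against the ports we intend to expose. This is a rough illustration only; `is_listening`, `unexpected_ports` and the example port numbers are hypothetical, and a real audit would more likely rely on the operating system's own tooling than on probing.

```python
import socket


def is_listening(host, port, timeout=0.5):
    """Return True if a TCP connection to (host, port) succeeds."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error code otherwise
        return sock.connect_ex((host, port)) == 0


def unexpected_ports(listening, allowed):
    """Return the sorted ports that are open but not on the allowlist."""
    return sorted(set(listening) - set(allowed))


if __name__ == "__main__":
    allowed = {22, 443}  # assumed policy: SSH and HTTPS only
    candidates = [22, 80, 443, 8080]
    open_ports = [p for p in candidates if is_listening("127.0.0.1", p)]
    print("doors we did not mean to leave open:", unexpected_ports(open_ports, allowed))
```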

Agency Risk and Security Risk thrive on complexity: the more complex the systems we create, the more opportunities there are for bad actors to insert themselves and extract their own value. The dilemma is, increasing security also means increasing Complexity Risk, because secure systems are necessarily more complex than insecure ones.

Goal Alignment

As we stated at the beginning, Agency Risk at any level comes down to differences of Goals between the different agents, whether they are people, teams or software.

So, if you can align the goals of the agents involved, you can mitigate Agency Risk. Nassim Nicholas Taleb calls this “skin in the game”: that is, the agent is exposed to the same risks as the principal.

“Which brings us to the largest fragilizer of society, and greatest generator of crises, absence of ‘skin in the game.’ Some become antifragile at the expense of others by getting the upside (or gains) from volatility, variations, and disorder and exposing others to the downside risks of losses or harm.” - Nassim Nicholas Taleb, Antifragile

This kind of financial exposure isn’t very common on software projects. Fixed Price Contracts and Employee Stock Options are two exceptions. But David McClelland’s Needs Theory suggests that there are other kinds of skin-in-the-game: the intrinsic interest in the work being done, or extrinsic factors such as the recognition, achievement, or personal growth derived from it.

“Need theory… proposed by psychologist David McClelland, is a motivational model that attempts to explain how the needs for achievement, power, and affiliation affect the actions of people from a managerial context… McClelland stated that we all have these three types of motivation regardless of age, sex, race, or culture. The type of motivation by which each individual is driven derives from their life experiences and the opinions of their culture.” - Need Theory, Wikipedia

One mitigation for Agency Risk is therefore to employ these extrinsic factors. For example, by making individuals responsible and rewarded for the success or failure of projects, we can align their personal motivations with those of the project.

“One key to success in a mission is establishing clear lines of blame.” - Henshaw’s Law, Akin’s Laws Of Spacecraft Design

But extrinsic motivation is a complex, difficult-to-apply tool. In Map And Territory Risk we will come back to this and look at the various ways in which it can go awry.

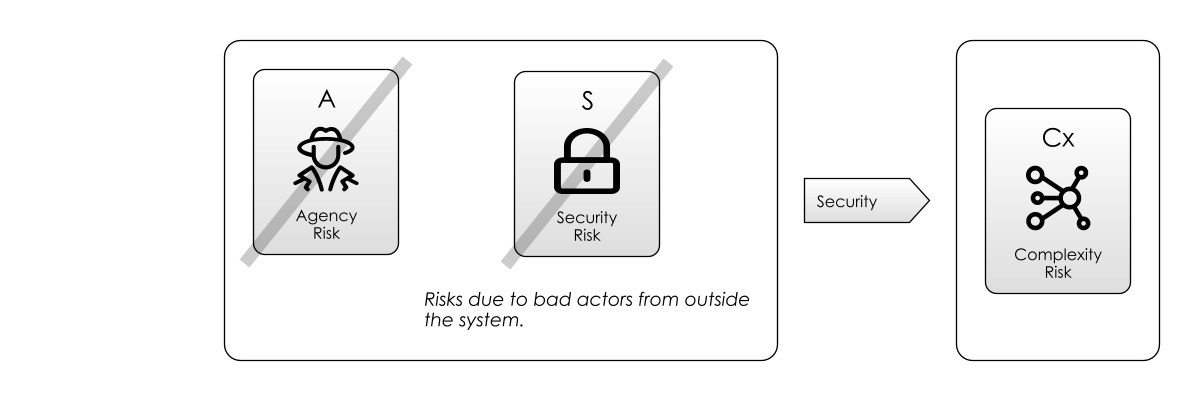

Tools like Pair Programming and Collective Code Ownership are about mitigating Staff Risks like Key Person Risk and Learning Curve Risk, but these push in the opposite direction to individual responsibility.

This is an important consideration: in adopting those tools, you are necessarily setting aside certain tools to manage Agency Risk as a result.

We’ve looked at various shades of Agency Risk and three mitigations for it. Agency Risk is a concern at the level of individual agents, whether they are processes, people, systems or teams.

So having looked at agents individually, it’s time to look more closely at Goals, and the Attendant Risks when aligning them amongst multiple agents.

On to Coordination Risk…